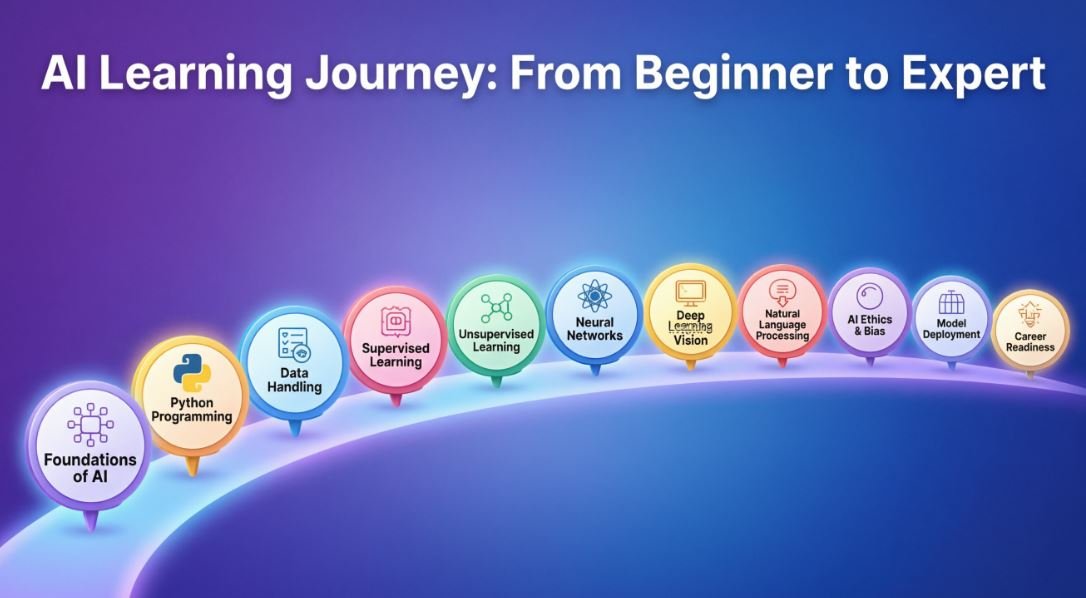

🤖 Complete Beginner’s Journey to Artificial Intelligence

Your Step-by-Step Guide to Mastering AI from Zero to Hero

🎯 Welcome to Your AI Journey!

Welcome to the most exciting technological revolution of our time! This comprehensive guide will take you from complete beginner to confident AI practitioner. Whether you’re an aspiring AI engineer, a professional seeking to upskill, or simply curious about artificial intelligence, this journey is designed specifically for you.

What You’ll Learn:

- Fundamental AI concepts and terminology

- Machine Learning and Deep Learning techniques

- Natural Language Processing and Large Language Models

- Python programming for AI development

- Popular AI frameworks (TensorFlow, PyTorch, LangChain)

- Ethical AI principles and responsible development

- Real-world AI applications across industries

- Career pathways and interview preparation

Understanding AI Fundamentals

Beginner · Theory

What is Artificial Intelligence?

Artificial Intelligence is the science of creating machines that can mimic human intelligence—thinking, learning, problem-solving, and decision-making autonomously.

The AI Hierarchy: Understanding the Relationship

Artificial Intelligence (Broad Field) → The umbrella term for making machines intelligent

↳ Machine Learning (Subset) → Enables learning from data without explicit programming

↳ Deep Learning (Subset of Machine Learning) → Uses multi-layer neural networks to learn complex patterns

Types of AI

| Type | Description | Status |
|---|---|---|
| Narrow AI (ANI) | Specialized for specific tasks (e.g., Siri, recommendation systems) | ✅ Currently Available |
| General AI (AGI) | Human-level intelligence across all domains | 🔬 Under Research |
| Super AI (ASI) | Surpasses human intelligence in all aspects | 🔮 Theoretical |

Key AI Domains

🧠 Machine Learning

Algorithms that learn patterns from data to make predictions

👁️ Computer Vision

Teaching machines to interpret and understand visual information

💬 Natural Language Processing

Enabling machines to understand and generate human language

🤖 Robotics

Creating intelligent machines that interact with the physical world

Real-World AI Applications

- Virtual Assistants: Siri, Alexa, Google Assistant

- Recommendation Systems: Netflix, YouTube, Amazon

- Autonomous Vehicles: Self-driving cars

- Healthcare: Medical diagnosis, drug discovery

- Finance: Fraud detection, algorithmic trading

- Content Creation: ChatGPT, Midjourney, DALL-E

Machine Learning Fundamentals

Beginner · Machine Learning

What is Machine Learning?

Machine Learning enables computers to learn from data and improve their performance without being explicitly programmed for every scenario. Instead of writing rules, we provide examples, and the algorithm discovers patterns.

Three Main Types of Machine Learning

1. Supervised Learning

Concept: Learning from labeled data (input-output pairs)

How it works: The algorithm learns the relationship between inputs and known outputs, then predicts outputs for new inputs.

Examples:

- Classification: Email spam detection (spam vs. not spam)

- Regression: House price prediction based on features

- Image Recognition: Identifying cats vs. dogs in photos

2. Unsupervised Learning

Concept: Finding hidden patterns in unlabeled data

How it works: The algorithm explores data structure without predefined categories.

Examples:

- Clustering: Customer segmentation for marketing

- Dimensionality Reduction: Compressing data while preserving information

- Anomaly Detection: Identifying unusual patterns (fraud detection)

3. Reinforcement Learning

Concept: Learning through trial and error with rewards and penalties

How it works: An agent interacts with an environment, receives feedback (rewards/penalties), and learns optimal actions to maximize cumulative rewards.

Examples:

- Game Playing: AlphaGo, AlphaZero (Go, chess, shogi)

- Robotics: Teaching robots to walk or manipulate objects

- Autonomous Driving: Learning optimal driving behaviors
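The reward-driven loop described for reinforcement learning can be sketched with tabular Q-learning, one common algorithm for it. Everything in this example is an illustrative assumption: the five-cell corridor environment, the reward of 1 for reaching the last cell, and the values of `ALPHA` and `GAMMA`.

```python
import random

N_STATES = 5              # corridor cells 0..4; reaching cell 4 ends the episode
ACTIONS = (-1, +1)        # step left or step right
ALPHA, GAMMA = 0.5, 0.9   # learning rate and discount factor (illustrative values)

def step(state, action):
    """Toy environment: move along the corridor, reward 1 only on reaching the goal."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    reward = 1.0 if next_state == N_STATES - 1 else 0.0
    return next_state, reward

def train(episodes=500, seed=0):
    rng = random.Random(seed)
    # q[state][action_index] estimates the long-run reward of taking that action.
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        while state != N_STATES - 1:
            a = rng.randrange(2)                       # trial and error: random action
            next_state, reward = step(state, ACTIONS[a])
            # Nudge the estimate toward reward + discounted best future value.
            target = reward + GAMMA * max(q[next_state])
            q[state][a] += ALPHA * (target - q[state][a])
            state = next_state
    return q

q_table = train()
# Greedy policy: in each non-terminal cell, pick the action with the higher estimate.
policy = [ACTIONS[row.index(max(row))] for row in q_table[:-1]]
print(policy)  # [1, 1, 1, 1] -> always step right, toward the reward
```

The agent is never told that "right" is correct. It only sees rewards, and the learned values end up favoring the actions that lead to them.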

The Machine Learning Workflow

1. Clearly define what you want to predict or classify

2. Gather relevant, high-quality data for your problem

3. Clean, normalize, and split the data (training/testing sets)

4. Choose an appropriate algorithm (decision trees, neural networks, etc.)

5. Feed the training data to the algorithm so it can learn patterns

6. Test model performance on unseen data

7. Fine-tune hyperparameters to improve performance

8. Deploy the model to production for real-world use
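The core of this workflow (prepare and split data, train, evaluate on unseen data) can be sketched in a few lines of plain Python. The dataset below is made up for illustration, and a simple least-squares line stands in for whatever algorithm a real project would choose.

```python
# Made-up (feature, target) pairs: roughly y = 3x + 2 with a little noise.
data = [(float(x), 3.0 * x + 2.0 + (0.5 if x % 2 == 0 else -0.5)) for x in range(10)]

# Split: hold out the last two points as unseen test data.
# (Real projects usually shuffle before splitting.)
train_set, test_set = data[:8], data[8:]

def fit_line(points):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in points)
    variance = sum((x - mean_x) ** 2 for x, _ in points)
    slope = covariance / variance
    return slope, mean_y - slope * mean_x

def mean_squared_error(points, slope, intercept):
    return sum((slope * x + intercept - y) ** 2 for x, y in points) / len(points)

# Train on the training set only, then evaluate on data the model has not seen.
slope, intercept = fit_line(train_set)
test_mse = mean_squared_error(test_set, slope, intercept)
print(f"slope={slope:.2f} intercept={intercept:.2f} test MSE={test_mse:.2f}")
```

Libraries such as Scikit-learn wrap these steps in ready-made functions, but the structure stays the same: fit on training data, report performance on held-out data.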

Common ML Challenges

⚠️ Overfitting

Problem: The model performs well on training data but poorly on new data because it memorized the training examples instead of learning general patterns

Solutions:

- Use more training data

- Simplify the model (reduce complexity)

- Apply regularization techniques

- Use cross-validation

- Use early stopping during training

⚠️ Underfitting

Problem: Model is too simple to capture patterns (poor performance on both training and test data)

Solutions:

- Use more complex models

- Add more relevant features

- Reduce regularization

- Train longer

Deep Learning & Neural Networks

Intermediate · Deep Learning

What is Deep Learning?

Deep Learning is a subset of machine learning that uses artificial neural networks with multiple layers (hence “deep”) to automatically learn hierarchical representations of data. It excels at handling complex, high-dimensional data like images, audio, and text.

Why Deep Learning Revolutionized AI

- Automatic Feature Extraction: Greatly reduces the need for manual feature engineering

- Handles Complex Data: Excels with images, audio, video, and text

- Scales with Data: Performance improves with more data

- End-to-End Learning: Learns directly from raw data to output

Neural Networks: The Building Blocks

The Perceptron (Simplest Neural Unit)

A perceptron takes multiple inputs, multiplies each by a weight, sums them up, adds a bias, and passes the result through an activation function to produce an output.
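A minimal sketch of that computation, using a step function as the activation. The weights and bias are hand-picked (not learned) so that the unit behaves like a logical AND gate; they are assumptions chosen purely for illustration.

```python
def perceptron(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, passed through a step activation."""
    weighted_sum = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1 if weighted_sum > 0 else 0

# Hand-picked parameters: the sum exceeds 0 only when both inputs are 1.
AND_WEIGHTS, AND_BIAS = [1.0, 1.0], -1.5

outputs = [perceptron([a, b], AND_WEIGHTS, AND_BIAS) for a in (0, 1) for b in (0, 1)]
print(outputs)  # [0, 0, 0, 1]
```

Training a network means finding weights and biases like these automatically from data rather than choosing them by hand.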

Key Components of Neural Networks

| Component | Purpose |
|---|---|
| Input Layer | Receives raw data features |
| Hidden Layers | Process and transform data (multiple layers = “deep”) |
| Output Layer | Produces final predictions |
| Weights & Biases | Learnable parameters adjusted during training |
| Activation Functions | Introduce non-linearity (ReLU, Sigmoid, Tanh) |
| Loss Function | Measures prediction error |
| Optimizer | Updates weights to minimize loss (SGD, Adam) |

The Training Process: Backpropagation
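In outline, training repeats four steps: run a forward pass to get predictions, measure the error with a loss function, use the chain rule to compute how much each weight contributed to that error, and adjust the weights in the opposite direction. The sketch below shows this for a single linear neuron with a mean-squared-error loss. It is a simplified stand-in: real backpropagation applies the same chain rule layer by layer through a deep network. The data and learning rate are made-up values.

```python
# Toy data generated from y = 2x + 1; the neuron should recover w = 2, b = 1.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]

w, b = 0.0, 0.0          # learnable parameters, starting from zero
learning_rate = 0.1      # illustrative value

for epoch in range(1000):
    grad_w = grad_b = 0.0
    for x, y in zip(xs, ys):
        prediction = w * x + b              # forward pass
        error = prediction - y              # loss per sample is error ** 2
        # Chain rule: d(error^2)/dw = 2 * error * x, d(error^2)/db = 2 * error
        grad_w += 2 * error * x / len(xs)
        grad_b += 2 * error / len(xs)
    # Gradient descent step: move against the gradient to reduce the loss.
    w -= learning_rate * grad_w
    b -= learning_rate * grad_b

print(round(w, 4), round(b, 4))  # 2.0 1.0
```

Optimizers such as SGD and Adam differ mainly in how they turn these gradients into weight updates.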

Types of Neural Networks

1. Convolutional Neural Networks (CNNs)

Best For: Image and video processing

Key Feature: Convolutional layers that detect spatial patterns (edges, textures, objects)

Applications:

- Image classification and recognition

- Object detection and segmentation

- Facial recognition

- Medical image analysis

2. Recurrent Neural Networks (RNNs)

Best For: Sequential data (time series, text, speech)

Key Feature: Memory of previous inputs through feedback connections

Limitation: Struggles with long sequences (vanishing gradient problem)

Solution: LSTM (Long Short-Term Memory) and GRU (Gated Recurrent Unit) networks handle long-range dependencies better

Applications:

- Language translation

- Speech recognition

- Time series forecasting

- Text generation

Regularization Techniques

Preventing Overfitting in Deep Networks

- Dropout: Randomly deactivate neurons during training to prevent co-adaptation

- L1/L2 Regularization: Add penalty for large weights to loss function

- Batch Normalization: Normalize layer inputs to stabilize training

- Data Augmentation: Create variations of training data (rotation, flipping, etc.)

- Early Stopping: Stop training when validation performance stops improving
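Two of these techniques are simple enough to sketch directly. The helper names, the `patience` parameter, and the sample numbers below are illustrative assumptions, not any particular framework's API.

```python
def l2_penalty(weights, strength):
    """L2 regularization term: strength times the sum of squared weights.
    It is added to the loss so that large weights become more costly."""
    return strength * sum(w * w for w in weights)

def early_stopping_epoch(val_losses, patience=2):
    """Return the epoch index at which training would stop: the first epoch
    where validation loss has failed to improve `patience` times in a row."""
    best = float("inf")
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best:
            best = loss
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch
    return len(val_losses) - 1   # never triggered: train to the end

penalty = l2_penalty([3.0, -4.0], strength=0.01)   # 0.01 * (9 + 16)

# Made-up validation losses: improving until epoch 3, then getting worse.
val_losses = [0.90, 0.70, 0.55, 0.50, 0.52, 0.56, 0.61]
stop_epoch = early_stopping_epoch(val_losses)
print(stop_epoch)  # 5
```

In practice the weights saved at the best epoch (epoch 3 here) are usually the ones kept.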

Hands-On: Building Your First Neural Network

Natural Language Processing & Large Language Models

Intermediate · NLP

What is Natural Language Processing?

NLP enables computers to understand, interpret, and generate human language. It bridges the gap between human communication and machine understanding.

Core NLP Concepts

Text Preprocessing Techniques

Tokenization

Breaking text into individual words, phrases, or symbols (tokens)

Stemming

Reducing words to their root form by removing suffixes

Lemmatization

Converting words to their dictionary form (lemma) using vocabulary and grammar

Stop Words Removal

Filtering out common words that don’t add meaning
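A rough, dependency-free sketch of tokenization, stop word removal, and stemming. The stop word list and suffix rules are tiny illustrative assumptions. Libraries such as NLTK and spaCy ship far more complete versions.

```python
import re

STOP_WORDS = {"the", "a", "an", "is", "are", "and", "of", "to", "in", "on"}  # tiny sample list
SUFFIXES = ("ing", "ed", "s")   # crude stemming rules, for illustration only

def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())

def remove_stop_words(tokens):
    return [t for t in tokens if t not in STOP_WORDS]

def stem(token):
    """Strip the first matching suffix, keeping at least three characters."""
    for suffix in SUFFIXES:
        if token.endswith(suffix) and len(token) - len(suffix) >= 3:
            return token[: -len(suffix)]
    return token

text = "The cats are chasing the mice and jumped on the tables"
tokens = tokenize(text)
filtered = remove_stop_words(tokens)
stems = [stem(t) for t in filtered]
print(stems)  # ['cat', 'chas', 'mice', 'jump', 'table']
```

The output shows why stemming and lemmatization differ: suffix stripping produces non-words like "chas" and leaves "mice" untouched, whereas a lemmatizer with a vocabulary would return "chase" and "mouse".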

Document-Term Matrix

A mathematical representation of text where rows represent documents and columns represent unique terms, with values indicating term frequency or importance.
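As a small illustration, the sketch below builds a term-frequency document-term matrix from three made-up sentences, using whitespace splitting as a stand-in for real tokenization.

```python
from collections import Counter

documents = [
    "the cat sat on the mat",
    "the dog sat on the log",
    "cats and dogs",
]

tokenized = [doc.split() for doc in documents]
# Columns: every unique term across all documents, in alphabetical order.
vocabulary = sorted({token for doc in tokenized for token in doc})

# Rows: one per document, holding how often each vocabulary term occurs in it.
matrix = []
for doc in tokenized:
    counts = Counter(doc)
    matrix.append([counts[term] for term in vocabulary])

print(vocabulary)
for row in matrix:
    print(row)
```

Each row is now a fixed-length numeric vector, which is what most machine learning algorithms expect as input. Weighting schemes such as TF-IDF replace the raw counts with importance scores.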

NLP Applications

- Sentiment Analysis: Determining emotional tone of text (positive/negative/neutral)

- Chatbots & Virtual Assistants: Conversational AI systems

- Machine Translation: Google Translate, DeepL

- Text Summarization: Automatic summary generation

- Named Entity Recognition: Identifying people, places, organizations in text

- Question Answering: Retrieving answers from text databases

Large Language Models (LLMs)

What Makes LLMs Revolutionary?

Large Language Models are deep learning models trained on massive text datasets that can understand context, generate human-like text, answer questions, write code, and much more.

Key Characteristics:

- Scale: Billions of parameters (GPT-4, Claude, Gemini)

- Transformer Architecture: Uses attention mechanisms to understand relationships between words

- Pre-training + Fine-tuning: Pre-trained on vast text corpora, then fine-tuned for specific tasks

- Few-shot Learning: Can perform new tasks with minimal examples

- Multimodal Capabilities: Process text, images, code, and more

Popular LLMs

| Model | Developer | Key Features |
|---|---|---|
| GPT-4 | OpenAI | Advanced reasoning, multimodal, creative writing |
| Claude | Anthropic | Long context, helpful and harmless, constitutional AI |
| Gemini | Google | Multimodal, integrated with Google services |
| LLaMA | Meta | Open source, efficient, customizable |

Transformer Architecture: The LLM Backbone

Key Innovation: Attention Mechanism

Transformers use “attention” to weigh the importance of different words when processing each word in a sentence, enabling better understanding of context and relationships.

Example: In “The animal didn’t cross the street because it was too tired,” the model learns that “it” refers to “animal” not “street” through attention.
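The weighting step can be sketched as scaled dot-product attention, the form used in transformers. The two-dimensional vectors below are hand-picked assumptions so that the query for "it" lines up with "animal". In a real model these vectors are learned and have hundreds or thousands of dimensions.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(query, keys, values):
    """Scaled dot-product attention for a single query vector."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    # Output: the values averaged according to the attention weights.
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, output

words = ["animal", "street", "tired"]
keys = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]   # made-up key vectors, one per word
values = keys                                  # reuse keys as values for simplicity
query_it = [2.0, 0.0]                          # made-up query vector for "it"

weights, output = attention(query_it, keys, values)
for word, weight in zip(words, weights):
    print(f"{word}: {weight:.2f}")
```

The largest weight lands on "animal", so the representation computed for "it" is dominated by that word's value vector.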

Practical LLM Applications

💡 Content Creation

Blog posts, marketing copy, social media content, creative writing

💻 Code Generation

GitHub Copilot, code completion, debugging assistance

🎓 Education

Tutoring, personalized learning, explanation generation

🔍 Research & Analysis

Document summarization, data extraction, literature review

🏥 Healthcare

Medical image analysis, diagnosis support, patient communication

💼 Business

Customer service, email automation, report generation

Hands-On: Using Google Gemini for Medical Image Analysis

Python & Essential AI Frameworks

Beginner · Python

Why Python for AI?

Python’s Advantages for AI Development

- Simple & Readable Syntax: Easy to learn and write

- Rich Library Ecosystem: Comprehensive AI/ML libraries

- Platform Independent: Works on Windows, Mac, Linux

- Strong Community: Massive support and resources

- Excellent Documentation: Well-documented libraries

- Integration Friendly: Easily connects with other languages

- Rapid Prototyping: Quick experimentation and iteration

Essential Python Libraries for AI

| Library | Purpose | Use Cases |
|---|---|---|
| NumPy | Numerical computing | Array operations, linear algebra, mathematical functions |
| Pandas | Data manipulation | Data cleaning, analysis, CSV/Excel handling |
| Matplotlib | Data visualization | Creating plots, charts, graphs |
| Scikit-learn | Machine learning | Classification, regression, clustering, preprocessing |
| TensorFlow | Deep learning | Neural networks, computer vision, NLP |
| PyTorch | Deep learning | Research, dynamic neural networks, GPU acceleration |
| Keras | High-level DL API | Quick neural network prototyping |
| OpenCV | Computer vision | Image/video processing, object detection |
| NLTK / spaCy | NLP | Text processing, tokenization, POS tagging |

TensorFlow: Deep Dive

What is TensorFlow?

TensorFlow is Google’s open-source framework for building and deploying machine learning models at scale. It supports everything from research to production deployment.

Key Features:

- Flexible architecture for CPUs, GPUs, TPUs

- TensorFlow Lite for mobile/embedded devices

- TensorFlow.js for browser-based ML

- TensorFlow Extended (TFX) for production pipelines

- Keras API integrated for easy model building

TensorFlow Use Cases

- Computer Vision: Image classification, object detection, segmentation

- NLP: Sentiment analysis, translation, text generation

- Time Series: Stock prediction, weather forecasting

- Generative AI: GANs for image synthesis

- Recommendation Systems: Content and product recommendations

Building a Model with TensorFlow

Modern AI Frameworks & Tools

1. LangChain

Purpose: Framework for developing applications powered by LLMs

Features:

- Chain multiple LLM calls together

- Connect LLMs to external data sources

- Build conversational agents and chatbots

- Memory management for context retention

2. Langflow

Purpose: Visual low-code platform for building LangChain applications

Features:

- Drag-and-drop interface for AI workflows

- No coding required for basic applications

- Export to Python code when needed

- Rapid prototyping of AI solutions

3. Ollama

Purpose: Run LLMs locally on your machine

Features:

- Privacy-first (data stays on your device)

- Support for multiple open-source models (Llama, Mistral, etc.)

- Simple command-line interface

- No internet required after download

4. Hugging Face Transformers

Purpose: Library for state-of-the-art NLP models

Features:

- Pre-trained models for various NLP tasks

- Easy fine-tuning on custom data

- Support for PyTorch and TensorFlow

- Model hub with thousands of models

Getting Started Roadmap

1. Python basics: variables, data types, functions, loops, OOP basics

2. NumPy and Pandas: array operations, data manipulation, CSV handling

3. Matplotlib: creating plots, understanding data visually

4. Scikit-learn: build first ML models (regression, classification)

5. TensorFlow or PyTorch: neural networks, computer vision, NLP projects

6. LLM tooling: LangChain, Hugging Face, specialized tools

AI Ethics & Responsible AI

Intermediate · Ethics

Why AI Ethics Matter

As AI systems become more powerful and widespread, ensuring they’re fair, transparent, and beneficial is critical. Irresponsible AI can perpetuate bias, invade privacy, and cause real harm.

⚠️ Real-World AI Failures

- Hiring Algorithms: Amazon’s AI recruiting tool showed bias against women

- Facial Recognition: Higher error rates for people of color

- Healthcare AI: Algorithms prioritizing certain demographics

- Misinformation: Google Gemini generating historically inaccurate images

- Deepfakes: AI-generated fake videos used for fraud and harassment

The Five Pillars of Trustworthy AI

1. Fairness & Bias Mitigation

Goal: Ensure AI systems treat all individuals and groups equitably

Challenges:

- Training data reflects historical biases

- Algorithm design can amplify existing inequalities

- Proxy variables can encode protected attributes

Solutions:

- Audit training data for representation gaps

- Use fairness-aware algorithms

- Test models across demographic groups

- Implement bias detection and correction

- Build diverse development teams
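Testing across demographic groups can start with something as simple as comparing how often the model gives a positive outcome to each group. The sketch below computes per-group selection rates and the gap between them (often called the demographic parity difference). The predictions and group labels are made up, and this is only one of several fairness metrics in use.

```python
from collections import defaultdict

def selection_rates(predictions, groups):
    """Fraction of positive (1) predictions within each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for prediction, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += prediction
    return {group: positives[group] / totals[group] for group in totals}

# Made-up model outputs (1 = approved) and the group each applicant belongs to.
predictions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

rates = selection_rates(predictions, groups)
parity_gap = max(rates.values()) - min(rates.values())
print(rates, parity_gap)  # {'A': 0.75, 'B': 0.25} 0.5
```

A gap this large would be a signal to investigate the training data and features before deployment. What counts as acceptable depends on the application and on applicable law.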

2. Transparency & Explainability

Goal: Make AI decision-making processes understandable

Why It Matters:

- Users deserve to know how decisions affecting them are made

- Debugging and improvement require understanding

- Legal compliance (GDPR “right to explanation”)

- Building trust with stakeholders

Techniques:

- LIME and SHAP for model interpretation

- Attention visualization in neural networks

- Decision tree surrogate models

- Feature importance analysis

3. Privacy & Data Protection

Goal: Safeguard personal information and prevent misuse

Best Practices:

- Data Minimization: Collect only necessary data

- Anonymization: Remove personally identifiable information

- Encryption: Protect data in transit and at rest

- Differential Privacy: Add noise to preserve individual privacy

- Federated Learning: Train models without centralizing data

- Access Controls: Limit who can access sensitive data
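To make "add noise" concrete, here is a minimal sketch of the Laplace mechanism, a standard way to release a count with differential privacy. The `ages` data, the `epsilon` values, and the helper names are illustrative assumptions. A production system should rely on a vetted privacy library rather than hand-rolled noise.

```python
import random

def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) noise: the difference of two independent
    Exp(1) draws follows a standard Laplace distribution."""
    return scale * (rng.expovariate(1.0) - rng.expovariate(1.0))

def private_count(records, predicate, epsilon, rng):
    """Noisy count of matching records. Adding or removing one person changes
    a count by at most 1 (sensitivity 1), so the noise scale is 1 / epsilon."""
    true_count = sum(1 for record in records if predicate(record))
    return true_count + laplace_noise(1.0 / epsilon, rng)

ages = [23, 35, 41, 52, 29, 64, 38]   # made-up records
rng = random.Random(42)

# Smaller epsilon means more noise and stronger privacy.
noisy = private_count(ages, lambda age: age >= 40, epsilon=0.5, rng=rng)
print(f"true count: 3, released value: {noisy:.2f}")
```

Because the released value is randomized, no single individual's presence in the data can be confidently inferred from it, while averages over many queries or large datasets remain useful.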

4. Robustness & Safety

Goal: Ensure AI systems are reliable and resilient to attacks

Threats:

- Adversarial Attacks: Malicious inputs designed to fool models

- Data Poisoning: Corrupting training data

- Model Extraction: Stealing proprietary models

Defenses:

- Adversarial training with perturbed examples

- Input validation and sanitization

- Regular security audits and penetration testing

- Monitoring for anomalous behavior

5. Accountability & Governance

Goal: Establish clear responsibility for AI systems

Framework Elements:

- Document model development, data sources, and decisions

- Establish AI ethics committees and review boards

- Create incident response plans

- Regular audits and impact assessments

- Continuous monitoring post-deployment

- Mechanisms for user feedback and appeals

Ethical AI Frameworks & Guidelines

| Organization | Framework/Principles |
|---|---|
| EU | AI Act – Risk-based regulation |
| OECD | AI Principles – Inclusive growth, human values |
| IEEE | Ethically Aligned Design |
| Partnership on AI | Collaborative best practices |
| Google | AI Principles – Socially beneficial, avoiding bias |

Implementing Responsible AI in Practice

💡 Practical Ethics Checklist for AI Projects

- Have we identified potential harms and stakeholders?

- Is our training data representative and unbiased?

- Can we explain how our model makes decisions?

- Have we tested for fairness across different groups?

- Do we have mechanisms for user consent and control?

- Is there human oversight for critical decisions?

- Have we documented limitations and failure modes?

- Do we have a plan for monitoring and updates?

Becoming an AI Engineer in 2025

Career · Advanced

The AI Engineering Career Path

AI engineering is one of the fastest-growing and highest-paying tech careers. This roadmap will guide you from beginner to job-ready AI professional.

Essential Skills for AI Engineers

1. Foundation Skills (3-6 months)

Programming

- Python: Master syntax, data structures, OOP, libraries

- SQL: Database queries, data extraction

- Git/GitHub: Version control, collaboration

Mathematics

- Linear Algebra: Vectors, matrices, transformations

- Calculus: Derivatives, gradients, optimization

- Probability & Statistics: Distributions, hypothesis testing, Bayes' theorem

Core Computer Science

- Data structures and algorithms

- Computational complexity

- System design basics

2. Machine Learning (3-6 months)

- Supervised learning (regression, classification)

- Unsupervised learning (clustering, dimensionality reduction)

- Model evaluation metrics

- Cross-validation and hyperparameter tuning

- Feature engineering

- Scikit-learn proficiency

3. Deep Learning (3-4 months)

- Neural network fundamentals

- CNNs for computer vision

- RNNs/LSTMs for sequences

- Transfer learning

- TensorFlow or PyTorch expertise

- GPU computing basics

4. Specialization Areas (Choose 1-2)

🗣️ Natural Language Processing

- Transformers & attention

- Fine-tuning LLMs

- Prompt engineering

- RAG (Retrieval Augmented Generation)

👁️ Computer Vision

- Object detection (YOLO, R-CNN)

- Image segmentation

- GANs for image generation

- Video analysis

🎨 Generative AI

- Diffusion models

- GANs and VAEs

- Text-to-image (Stable Diffusion)

- LLM fine-tuning

🤖 Reinforcement Learning

- Q-learning, DQN

- Policy gradients

- Multi-agent systems

- Robotics applications

5. Hot Skills for 2025

- Prompt Engineering: Crafting effective LLM prompts for optimal outputs

- AI Agents: Building autonomous systems that can plan and execute tasks

- MLOps: Deploying and maintaining ML models in production

- Edge AI: Running AI models on mobile/IoT devices

- Multimodal AI: Models that process text, images, audio together

- AI Security: Protecting models from adversarial attacks

- Ethical AI: Building fair, transparent, accountable systems

Building Your AI Portfolio

Project Ideas by Level

| Level | Project Ideas |
|---|---|
| Beginner | • Iris flower classification • House price prediction • Sentiment analysis on tweets • Handwritten digit recognition (MNIST) |
| Intermediate | • Image classifier for custom dataset • Chatbot using Hugging Face • Stock price predictor • Recommendation system • Object detection in videos |
| Advanced | • Fine-tune LLM for domain-specific tasks • Build AI agent with LangChain • Multimodal search engine • Real-time facial recognition system • Deploy model to production with MLOps |

Portfolio Best Practices

- Host projects on GitHub with clear README files

- Include problem statement, approach, results

- Write blog posts explaining your projects (Medium, Dev.to)

- Create video demos (YouTube, Loom)

- Contribute to open-source AI projects

- Participate in Kaggle competitions

- Build a personal website showcasing your work

Learning Resources

Online Courses

- Coursera: Andrew Ng’s Machine Learning Specialization

- Fast.ai: Practical Deep Learning for Coders

- DeepLearning.AI: TensorFlow, NLP, MLOps specializations

- Udacity: AI Programming with Python Nanodegree

- edX: MIT’s Introduction to Deep Learning

Books

- Hands-On Machine Learning with Scikit-Learn, Keras & TensorFlow by Aurélien Géron

- Deep Learning by Ian Goodfellow, Yoshua Bengio, Aaron Courville

- Pattern Recognition and Machine Learning by Christopher Bishop

- Designing Data-Intensive Applications by Martin Kleppmann

Practice Platforms

- Kaggle: Competitions, datasets, notebooks

- LeetCode: Coding challenges

- HackerRank: AI/ML challenges

- Google Colab: Free GPU for experiments

Job Search Strategy

Where to Find AI Jobs

- LinkedIn (use keywords: “Machine Learning Engineer”, “AI Engineer”, “Data Scientist”)

- Indeed, Glassdoor, AngelList

- Company career pages (Google, Meta, OpenAI, Anthropic, etc.)

- AI-specific job boards (ai-jobs.net, deeplearning.ai careers)

- Networking: attend AI conferences, meetups, webinars

Preparing for AI Interviews

Common Interview Components

- Coding: Python, data structures, algorithms

- ML Theory: Concepts, algorithms, trade-offs

- Math: Linear algebra, calculus, probability

- System Design: ML pipelines, scalability

- Behavioral: Teamwork, problem-solving, past projects

Sample Interview Questions

Salary Expectations (2025 US Market)

| Role | Entry Level | Mid Level (3-5 yrs) | Senior (5+ yrs) |
|---|---|---|---|
| ML Engineer | $100k – $140k | $150k – $220k | $250k – $500k+ |
| AI Research Scientist | $120k – $160k | $180k – $280k | $300k – $600k+ |
| Data Scientist | $90k – $130k | $130k – $200k | $220k – $400k+ |

❓ Frequently Asked Questions

Retail: Personalized product recommendations, inventory optimization, demand forecasting, customer service chatbots, dynamic pricing, supply chain efficiency, fraud detection.

🚀 Your AI Journey Starts Now!

You’ve completed this comprehensive guide to artificial intelligence. You now have the roadmap, resources, and knowledge to begin your transformation into an AI professional. Remember:

Key Takeaways

- Start with fundamentals: Master Python, math, and basic ML before diving deep

- Learn by doing: Build projects constantly—theory without practice is useless

- Specialize strategically: Focus on 1-2 domains (NLP, CV, RL, etc.) to differentiate yourself

- Ethics matter: Always consider fairness, bias, and societal impact

- Stay current: AI evolves rapidly—continuous learning is essential

- Build in public: Share your work, contribute to open source, network

- Be patient: Mastery takes time, but consistent effort yields results

Next Steps

- Choose your first project from the beginner list

- Set up your development environment (Python, Jupyter, GitHub)

- Join an online AI community for support

- Dedicate 1-2 hours daily to learning and practicing

- Track your progress and celebrate small wins

💬 Remember

“The journey of a thousand miles begins with a single step. Your AI journey begins today. Stay curious, stay persistent, and welcome to the future!”