📊 Data Collection Cheat Sheet

Step 2: Gathering Relevant, Quality Data for Your Problem

🎯 What is Data Collection?

Data collection is the systematic process of gathering and measuring information on variables of interest to answer research questions, test hypotheses, and evaluate outcomes. Quality data collection is crucial for making informed decisions and building reliable machine learning models.

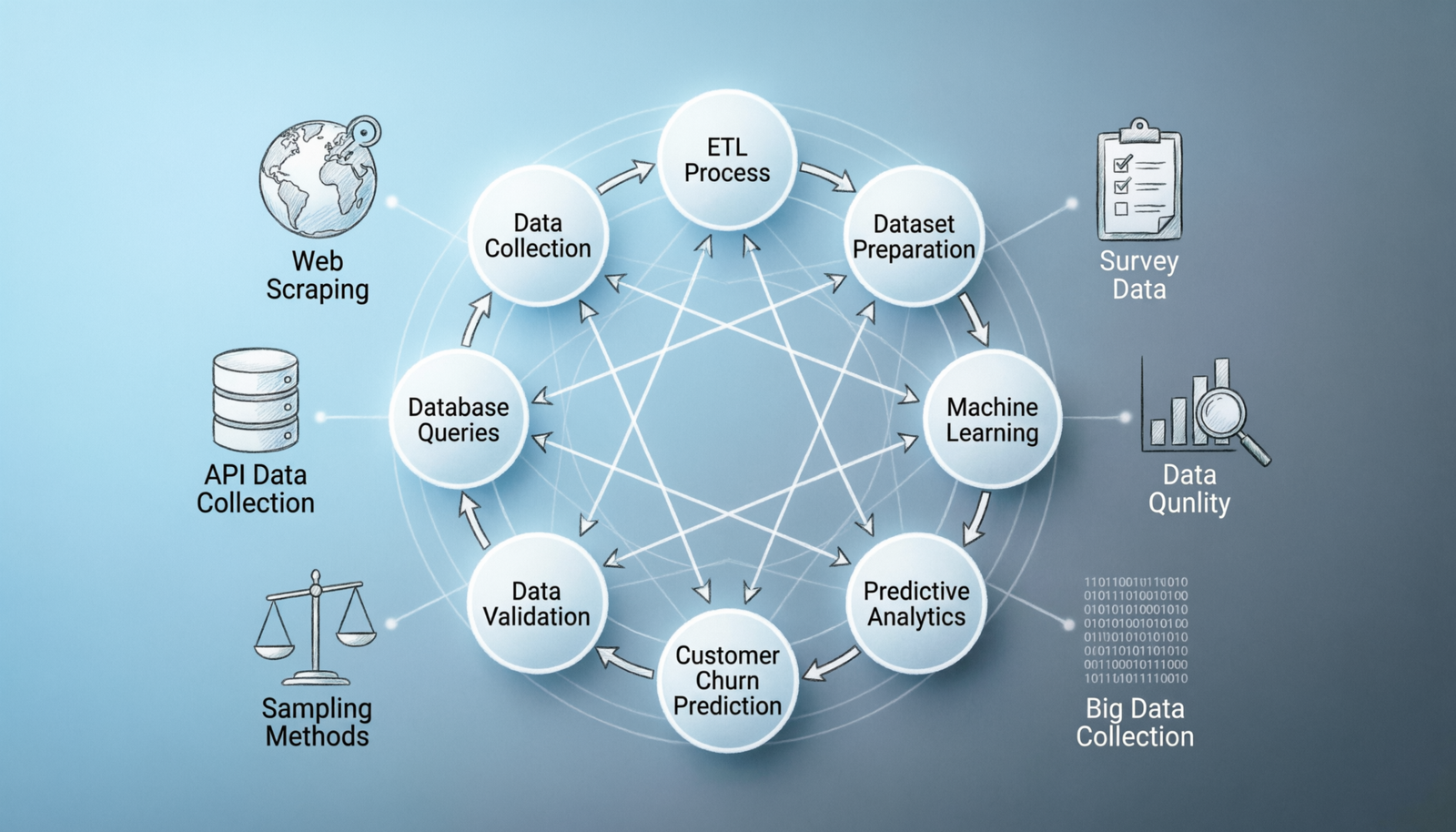

🔄 Data Collection Process

1. Define

What data do you need?

2. Identify

Where to find it?

3. Collect

Gather the data

4. Validate

Check quality

5. Store

Organize & secure

📚 Types of Data Sources

🔍 Primary Data

Data collected directly by you for your specific purpose

- Surveys & Questionnaires

- Interviews

- Experiments

- Observations

- Sensor Data

📖 Secondary Data

Data collected by others for different purposes

- Public Datasets

- Research Papers

- Government Statistics

- Commercial Databases

- Web Scraping

🌐 Web Data

Data available on the internet

- APIs (Twitter, Google, etc.)

- Web Scraping

- Social Media

- Public Archives

- Open Data Portals

🏢 Internal Data

Data from within your organization

- Database Records

- Transaction Logs

- CRM Systems

- Operational Data

- Historical Records

✅ Data Collection Checklist

Before Collection

- Define clear objectives and requirements

- Identify target population or data sources

- Determine sample size (if applicable)

- Choose appropriate collection methods

- Plan data storage and security measures

- Obtain necessary permissions and approvals

- Consider ethical implications and privacy

During Collection

- Follow standardized procedures consistently

- Document collection process and any issues

- Monitor data quality in real-time

- Handle missing or erroneous data appropriately

- Maintain data integrity and security

- Keep backups of collected data

After Collection

- Validate data completeness and accuracy

- Clean and preprocess data as needed

- Document metadata and data dictionary

- Store data securely with proper access controls

- Archive raw data for reproducibility

💡 Real-World Example: Customer Churn Prediction

🎯 Problem Statement

A telecommunications company wants to predict which customers are likely to cancel their subscription in the next 3 months to proactively offer retention incentives.

Step 1: Define Data Requirements

Based on domain knowledge and business understanding, identify what data might influence customer churn:

| Data Category | Specific Features | Why It Matters |

|---|---|---|

| Customer Demographics | Age, Gender, Location, Income Level | Different segments have different churn patterns |

| Account Information | Tenure, Contract Type, Payment Method | Longer tenure usually means lower churn |

| Service Usage | Monthly Data Usage, Call Minutes, SMS Count | Usage patterns indicate engagement |

| Billing Data | Monthly Charges, Total Charges, Payment History | Price sensitivity affects churn |

| Support Interactions | Number of Support Tickets, Resolution Time | Poor service quality drives churn |

| Target Variable | Churned (Yes/No) | Historical churn status for training |

Step 2: Identify Data Sources

Internal Sources:

- CRM Database: Customer demographics, account details, contract information

- Billing System: Monthly charges, payment history, outstanding balances

- Network Usage Logs: Data usage, call records, SMS logs

- Customer Support System: Ticket history, complaint records, resolution times

- Marketing Database: Promotional offers received, campaign responses

External Sources (Optional):

- Competitor Analysis: Market pricing data, competitor offers

- Economic Indicators: Regional unemployment rates, economic conditions

- Census Data: Demographic information by region

Step 3: Data Collection Plan

Sample Collection Script (Python):

import pandas as pd

import sqlalchemy as db

# Connect to internal databases

engine = db.create_engine('postgresql://user:password@localhost/telecom')

# Query customer data

query_customers = """

SELECT

customer_id,

age,

gender,

location,

tenure_months,

contract_type,

payment_method,

monthly_charges,

total_charges,

churned

FROM customers

WHERE signup_date >= '2023-01-01'

"""

# Query usage data

query_usage = """

SELECT

customer_id,

AVG(data_usage_mb) as avg_data_usage,

AVG(call_minutes) as avg_call_minutes,

AVG(sms_count) as avg_sms_count

FROM usage_logs

WHERE log_date >= CURRENT_DATE - INTERVAL '3 months'

GROUP BY customer_id

"""

# Query support data

query_support = """

SELECT

customer_id,

COUNT(*) as num_support_tickets,

AVG(resolution_hours) as avg_resolution_time

FROM support_tickets

WHERE ticket_date >= CURRENT_DATE - INTERVAL '6 months'

GROUP BY customer_id

"""

# Collect data

df_customers = pd.read_sql(query_customers, engine)

df_usage = pd.read_sql(query_usage, engine)

df_support = pd.read_sql(query_support, engine)

# Merge datasets

df_complete = df_customers.merge(df_usage, on='customer_id', how='left')

df_complete = df_complete.merge(df_support, on='customer_id', how='left')

# Save to file

df_complete.to_csv('customer_churn_data.csv', index=False)

print(f"Collected data for {len(df_complete)} customers")

print(f"Features: {df_complete.columns.tolist()}")

print(f"Churn rate: {df_complete['churned'].mean():.2%}")

Step 4: Data Quality Validation

Quality Checks Performed:

| Check Type | What to Verify | Action if Failed |

|---|---|---|

| Completeness | Missing values percentage < 5% | Impute or collect more data |

| Accuracy | Values within expected ranges | Investigate and correct errors |

| Consistency | No contradictory information | Cross-reference and resolve |

| Uniqueness | No duplicate customer records | Remove or merge duplicates |

| Timeliness | Data is current and relevant | Collect more recent data |

Step 5: Results Summary

Dataset Statistics:

- Total Records: 50,000 customers

- Time Period: January 2023 – December 2024

- Features: 18 variables (15 predictors + 1 target + 2 identifiers)

- Churn Rate: 26.5% (13,250 churned customers)

- Missing Data: 2.3% average across all features

- Data Quality Score: 94/100

🎓 Best Practices

✓ Do’s

- Start with a clear plan: Know exactly what data you need and why

- Document everything: Keep detailed records of data sources, collection methods, and any transformations

- Collect more than you think you need: It’s easier to filter out data than to collect it again later

- Validate continuously: Check data quality at every stage, not just at the end

- Maintain data lineage: Track where data came from and how it was processed

- Use version control: Keep track of different versions of your dataset

- Consider privacy and ethics: Anonymize sensitive data and comply with regulations

- Balance your dataset: Ensure adequate representation of all classes/categories

✗ Don’ts

- Don’t assume data quality: Always validate, even from trusted sources

- Don’t collect data you don’t need: Unnecessary data increases storage costs and privacy risks

- Don’t ignore missing data: Understand why data is missing before deciding how to handle it

- Don’t mix incompatible sources: Ensure data from different sources uses consistent definitions

- Don’t forget about bias: Selection bias can invalidate your entire analysis

- Don’t skip documentation: Future you will thank present you

- Don’t violate privacy laws: GDPR, CCPA, and other regulations have serious penalties

🛠️ Common Tools & Platforms

| Tool/Platform | Use Case | Key Features |

|---|---|---|

| Kaggle | Finding public datasets | Large collection, community-vetted, competitions |

| Google Dataset Search | Discovering datasets online | Search engine for datasets across the web |

| AWS S3 | Storing large datasets | Scalable, durable, integrates with ML tools |

| Apache Kafka | Real-time data streaming | High throughput, distributed, fault-tolerant |

| Beautiful Soup | Web scraping | Python library, easy to use, handles HTML/XML |

| PostgreSQL | Structured data storage | Reliable, ACID compliant, good for analytics |

| MongoDB | Unstructured/semi-structured data | NoSQL, flexible schema, horizontally scalable |

| Google Forms/SurveyMonkey | Surveys and questionnaires | Easy setup, automatic data collection, analytics |

⚠️ Common Pitfalls to Avoid

⚠️ Sampling Bias

Your sample doesn’t represent the population

Solution: Use random sampling or stratified sampling techniques

⚠️ Data Leakage

Including information that wouldn’t be available in production

Solution: Carefully review features and their temporal relationship

⚠️ Insufficient Data

Not enough examples to train a robust model

Solution: Collect more data or use data augmentation techniques

⚠️ Outdated Data

Using old data for a problem that has evolved

Solution: Regularly update datasets and retrain models

📊 Data Quality Metrics

| Metric | Description | Target |

|---|---|---|

| Completeness | Percentage of non-missing values | > 95% |

| Accuracy | Correctness of data values | > 98% |

| Consistency | Data uniformity across sources | 100% |

| Timeliness | Data freshness and relevance | < 24 hours old |

| Validity | Conformance to defined formats | 100% |

| Uniqueness | No unintended duplicates | 100% |

🎯 Key Takeaways

- 🎯 Quality over Quantity: 1,000 high-quality labeled examples beat 10,000 noisy ones

- 📋 Plan Before Collecting: A clear data collection plan saves time and resources

- 🔍 Validate Early and Often: Catching data issues early prevents wasted effort

- 📝 Document Everything: Future you (and your team) will appreciate it

- 🔒 Privacy First: Always consider ethical implications and legal requirements

- 🔄 Iterate: Data collection is rarely perfect on the first try

- 🤝 Collaborate: Work with domain experts to ensure data relevance