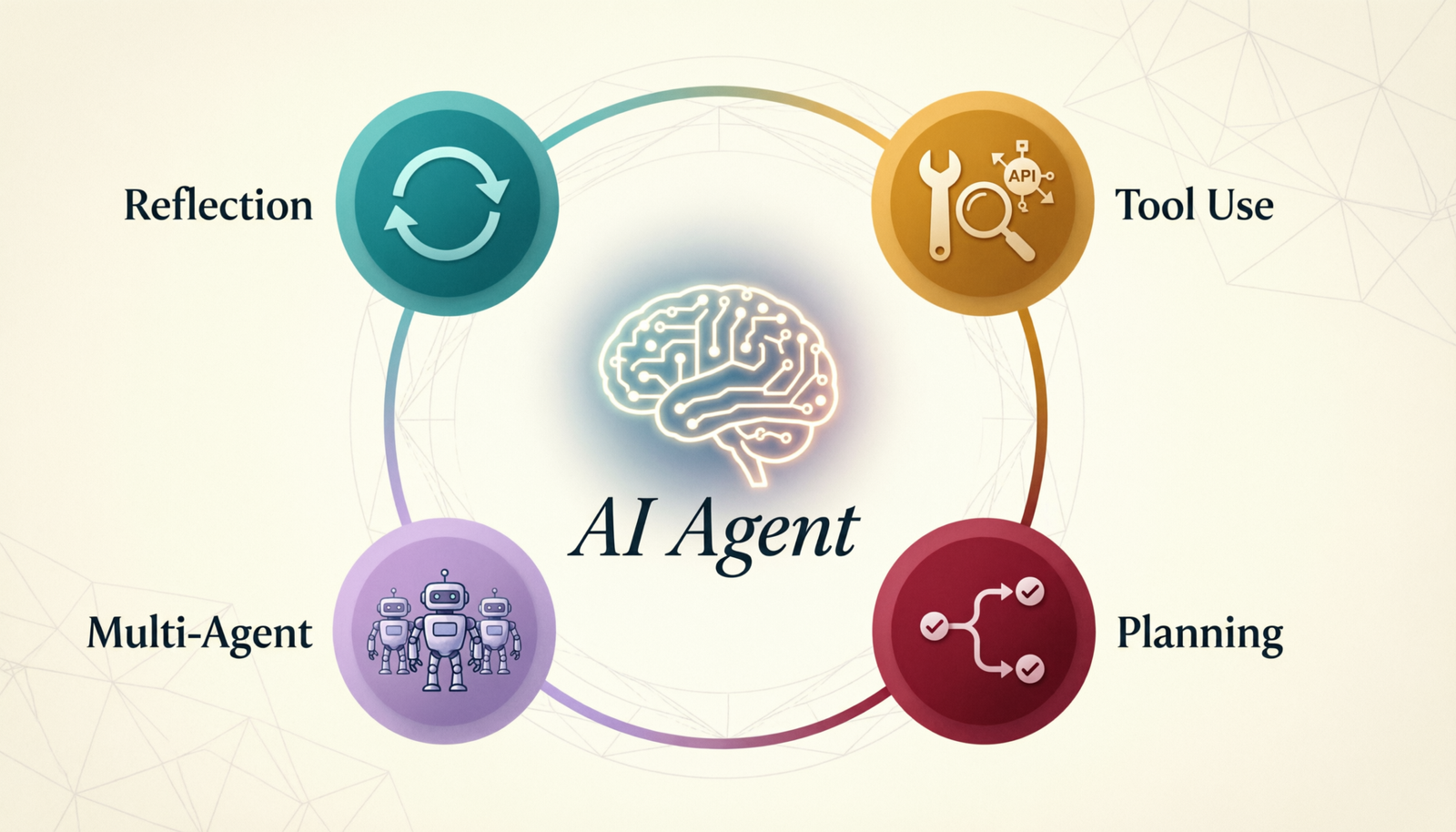

AI Agentic Design Patterns

Four foundational patterns that power modern AI agents — from self-correction loops to multi-agent collaboration.

Reflection

The agent evaluates its own output and iteratively improves it. After generating an initial response, it critiques the result, identifies errors, and refines it, looping until the output passes its own checks or an iteration limit is reached.

An AI writes code, runs it, sees the error, critiques its own logic, rewrites, and retests — all autonomously.
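The generate, critique, revise loop can be sketched in a few lines. This is a minimal illustration under stated assumptions, not a reference implementation: `reflect`, `toy_generate`, and `toy_critique` are hypothetical names, and the two toy functions stand in for what would normally be model calls.

```python
from typing import Callable, Optional, Tuple


def reflect(
    generate: Callable[[Optional[str], Optional[str]], str],
    critique: Callable[[str], Optional[str]],
    max_rounds: int = 3,
) -> Tuple[str, int]:
    """Critique and revise a draft until the critic is satisfied or the
    round limit is hit. Returns (final_draft, critique_rounds_used)."""
    draft = generate(None, None)  # first attempt, no feedback yet
    for round_no in range(1, max_rounds + 1):
        feedback = critique(draft)
        if feedback is None:  # critic found nothing to fix
            return draft, round_no
        draft = generate(draft, feedback)  # revise using the critique
    # Limit reached: the last revision is returned without a final check.
    return draft, max_rounds


# Toy stand-ins for model calls (hypothetical, for illustration only).
def toy_generate(previous: Optional[str], feedback: Optional[str]) -> str:
    if previous is None:
        return "def add(a, b): return a - b"  # deliberately buggy first draft
    return previous.replace("a - b", "a + b")  # "rewrite" after the critique


def toy_critique(draft: str) -> Optional[str]:
    namespace: dict = {}
    exec(draft, namespace)  # run the candidate; unsafe for untrusted code
    if namespace["add"](2, 3) != 5:
        return "add(2, 3) should return 5"
    return None


final_draft, rounds = reflect(toy_generate, toy_critique)
print(final_draft, rounds)
```

The `max_rounds` cap is the "threshold" from the description: without it, a critic that is never satisfied would loop forever.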

Tool Use

The agent is equipped with external tools — web search, calculators, APIs, databases — and decides when and how to call them to gather real information beyond its training data.

An agent searches the web for flight prices, calls a weather API, then synthesizes the results into a travel recommendation.
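A rough sketch of the dispatch step, assuming a small registry of tools and a keyword-based `choose_tool` function standing in for the model's decision. All names here (`TOOLS`, `choose_tool`, `run_agent`, `get_weather`, `calculator`) are hypothetical, and the weather data is canned rather than fetched from a real API.

```python
import operator
from typing import Callable, Dict


def calculator(a: str, b: str, op: str) -> float:
    """Apply one arithmetic operator to two numbers given as strings."""
    ops = {"+": operator.add, "-": operator.sub,
           "*": operator.mul, "/": operator.truediv}
    return ops[op](float(a), float(b))


def get_weather(city: str) -> str:
    """Canned lookup standing in for a real weather API."""
    return {"Lisbon": "sunny", "Oslo": "snow"}.get(city, "unknown")


TOOLS: Dict[str, Callable] = {"calculator": calculator, "get_weather": get_weather}


def choose_tool(question: str) -> dict:
    """Hypothetical stand-in for the model's decision. A real agent would
    have the model emit this structured call itself."""
    tokens = question.split()
    if "weather" in question.lower():
        return {"tool": "get_weather", "args": {"city": tokens[-1].rstrip("?")}}
    if len(tokens) == 3 and tokens[1] in ("+", "-", "*", "/"):
        return {"tool": "calculator",
                "args": {"a": tokens[0], "b": tokens[2], "op": tokens[1]}}
    return {"tool": None, "args": {}}


def run_agent(question: str) -> str:
    call = choose_tool(question)
    if call["tool"] is None:
        return "No tool needed."
    tool = TOOLS.get(call["tool"])
    if tool is None:  # the model asked for a tool that does not exist
        return f"Unknown tool: {call['tool']}"
    try:
        result = tool(**call["args"])
    except Exception as exc:  # tool errors are reported, not raised
        return f"Tool failed: {exc}"
    return f"{call['tool']} returned: {result}"


print(run_agent("What is the weather in Lisbon?"))
print(run_agent("12 * 7"))
```

Catching tool exceptions and returning them as text lets the agent see the failure and react to it, which is one way to handle the tool errors that this pattern has to deal with.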

Planning

Given a complex goal, the agent breaks it into sub-tasks, sequences them, and executes them step by step. Related techniques include Chain-of-Thought prompting, which elicits step-by-step reasoning, and ReAct (Reason + Act), which interleaves reasoning with actions.

Asked to “launch a product page,” the agent plans: research → copy → design spec → code → deploy — executing each phase.
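The product-page plan above can be modelled as sub-tasks with dependencies, ordered and then executed one at a time. In this sketch the plan is hard-coded (a real agent would ask the model to produce it), and `plan`, `execute`, and `run_plan` are hypothetical names. The ordering uses `graphlib.TopologicalSorter` from the Python standard library (3.9+).

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set, Tuple

# Hypothetical plan: each sub-task maps to the sub-tasks it depends on.
plan: Dict[str, Set[str]] = {
    "research": set(),
    "copy": {"research"},
    "design spec": {"copy"},
    "code": {"design spec"},
    "deploy": {"code"},
}


def execute(task: str, completed: Dict[str, str]) -> str:
    """Stand-in for real work. `completed` holds earlier results so a
    real step could build on them."""
    return f"{task} done"


def run_plan(plan: Dict[str, Set[str]]) -> Tuple[List[str], Dict[str, str]]:
    # Sequence the sub-tasks so every dependency runs before its dependents.
    order = list(TopologicalSorter(plan).static_order())
    results: Dict[str, str] = {}
    for task in order:  # execute step by step
        results[task] = execute(task, results)
    return order, results


order, results = run_plan(plan)
print(order)
```

Keeping the plan as explicit data makes it easy to inspect or re-plan midway, which can help with the plan drift that long task sequences are prone to.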

Multi-Agent

Multiple specialized AI agents collaborate, each handling a domain. An orchestrator agent delegates to sub-agents, aggregates their outputs, and resolves conflicts — like a team of experts.

A research agent, a writing agent, and a fact-checker agent all work together — each doing what it’s best at.
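A toy version of that research, fact-check, write team, assuming each agent is just a function and the orchestrator calls them in sequence. Every name and every piece of data here is a placeholder for illustration. In practice each agent would wrap its own model calls and tools.

```python
from typing import Dict, List, Optional

Claim = Dict[str, Optional[str]]


def research_agent(topic: str) -> List[Claim]:
    """Returns placeholder claims; a real agent would search or query a model."""
    return [
        {"text": f"{topic}: claim A", "source": "doc-1"},
        {"text": f"{topic}: claim B", "source": None},  # unsupported claim
    ]


def fact_checker_agent(claims: List[Claim]) -> List[Claim]:
    """Toy rule: keep only claims that cite a source."""
    return [c for c in claims if c["source"] is not None]


def writing_agent(claims: List[Claim]) -> str:
    """Turns verified claims into a single line of text."""
    return "; ".join(f"{c['text']} [{c['source']}]" for c in claims)


def orchestrator(topic: str) -> str:
    # Delegate to each specialist, passing outputs along the chain.
    claims = research_agent(topic)
    verified = fact_checker_agent(claims)  # the checker's verdict wins conflicts
    return writing_agent(verified)


report = orchestrator("agents")
print(report)
```

Here conflict resolution is as simple as it gets: the fact-checker can veto the researcher. Real systems often need richer protocols, which is where the communication overhead comes from.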

| Pattern | Core Idea | Best For | Key Challenge | Example Frameworks |
|---|---|---|---|---|
| Reflection | Self-evaluate and iterate | Code generation, writing quality | Knowing when to stop | LangChain, Reflexion |
| Tool Use | Extend with external tools | Real-time data, calculations | Tool selection & errors | OpenAI Functions, LangGraph |
| Planning | Decompose & sequence tasks | Multi-step workflows | Plan drift, long horizons | AutoGPT, ReAct, BabyAGI |
| Multi-Agent | Specialized agents collaborate | Large, parallel tasks | Communication overhead | CrewAI, AutoGen, MetaGPT |